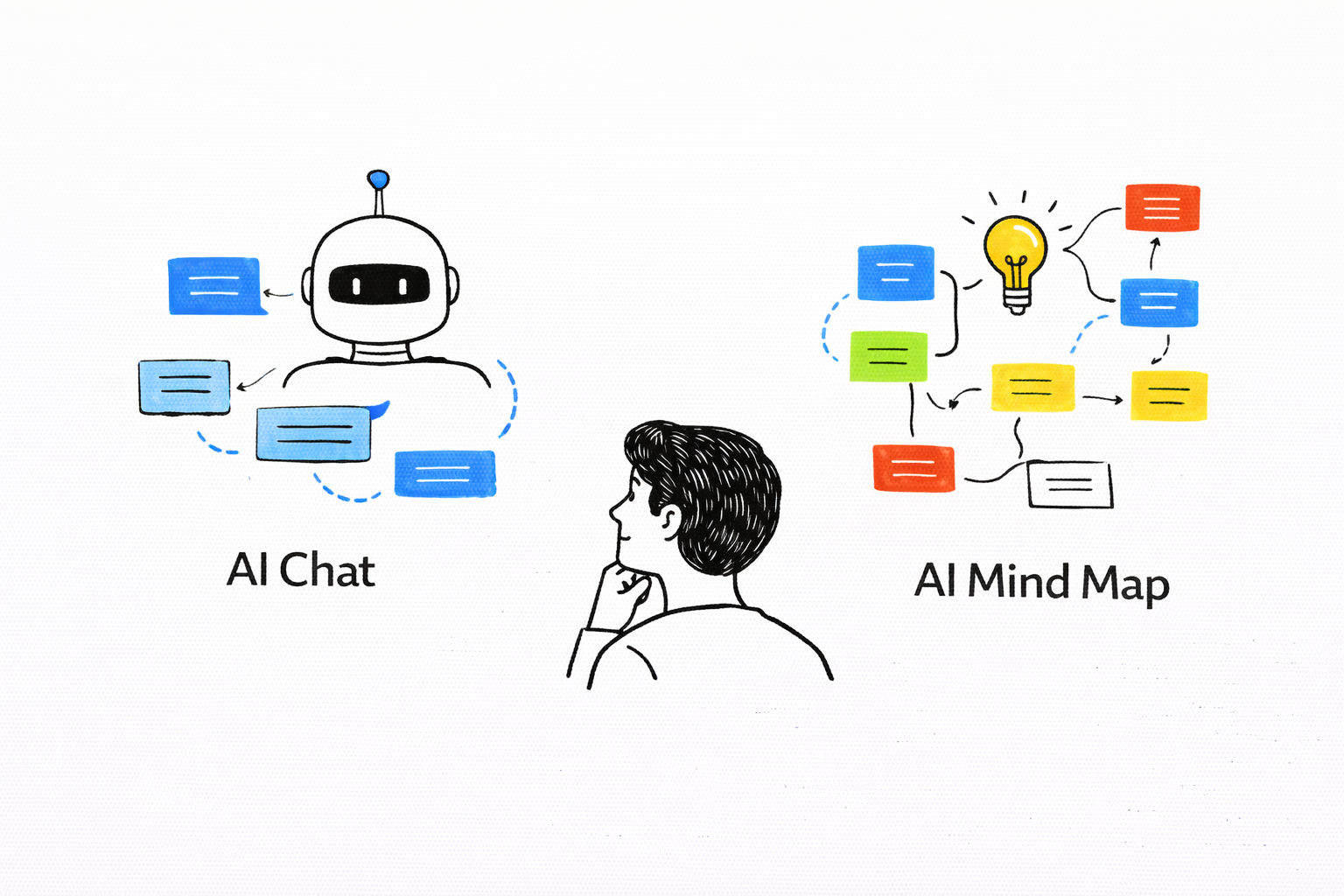

We are living through a quiet revolution in how we think, and the battleground is the interface. On one side, the familiar, conversational scroll of the AI chat window. On the other, the sprawling, interconnected canvas of an AI mind map. Both promise to augment our cognition, but they do so in fundamentally opposing ways. One presents thought as a linear narrative, a story told by the machine. The other presents thought as a spatial structure, a landscape to be explored and rearranged by the human.

This is more than a choice between tools; it’s a choice between cognitive models. In an age where we are drowning in information but starving for understanding, the medium through which we interact with machine intelligence shapes the depth and quality of the insights we can forge. Does the comforting dialogue of a chatbot lead us to consume answers, or does the demanding structure of a visual map compel us to construct understanding?

The tension is not new. Vannevar Bush, in his 1945 essay “As We May Think,” envisioned the “Memex,” a device for creating “associative trails” through information: a web of connections, not a linear file. Yet our dominant AI interfaces today often default to producing digital monologues, elegant echoes of the printed page. We have machines capable of associative genius, but we often ask them to speak in paragraphs.

This exploration is not about declaring a winner, but about understanding the cognitive affordances of each form. It’s about recognizing that the best thinking is a phase-based craft, and that the most profound insights often emerge in the translation of ideas from one mode to another.

The Illusion of Dialogue and Its Cognitive Traps

The AI chat interface is a masterpiece of human-centered design. It mimics the most natural form of human knowledge exchange: conversation. You ask, it answers. You probe, it refines. This turn-based, sequential flow feels intuitive and responsive, making it excellent for exploring a single thread of thought in depth. It is the digital equivalent of a Socratic dialogue, perfect for debugging a line of code, role-playing a scenario, or iteratively refining a piece of text.

However, this very strength conceals a profound cognitive trap. The interface frames interaction as a problem-solution exchange, privileging the AI’s narrative over the user’s mental model. We receive answers, but we may not build our own map of the territory. The output is a “wall of text”—a linear scroll that obscures hierarchy, buries relationships, and encourages passive consumption. The chat’s structure implies that thinking is a sequence of statements, not a network of connections.

The chat interface is like having a brilliantly knowledgeable but monologuing tutor. You get the information, but you inherit the tutor’s structure instead of building your own.

This linear format can increase cognitive load. Digesting a long, dense response requires the user to mentally parse, segment, and organize the information themselves, which is the very work the AI could be helping with. Research on learning suggests that explicit structure, such as headings, outlines, and clearly signaled relationships, reduces cognitive load, yet the default chat output often lacks this scaffolding. The interaction is “answer-oriented,” which can subtly shortcut our own essential process of discovery, connection-making, and sense-making.

Making Thinking Visible and Malleable

Contrast this with the cognitive model of an AI mind map. Here, thought is externalized not as narrative, but as a visible network of nodes and connections. The primary output is not an answer, but a structure. Tools like ClipMind take content from videos, PDFs, or chat threads and instantly render it as an editable visual hierarchy. This forces a different kind of engagement: hierarchical and relational thinking becomes mandatory.

The strengths of this model are orthogonal to those of chat. It provides a “god’s-eye view” of a topic, revealing the whole landscape at once. Relationships between concepts are explicit, not implied. Perhaps most importantly, the structure is malleable. The user is not a passive recipient but an active editor. The AI provides the raw semantic material (the key concepts and phrases), but the user provides, and can continuously tweak, the architecture. This creates a true co-creation dynamic.

The evidence for the efficacy of visual structuring is encouraging. A meta-analysis of mind-mapping studies reports a positive effect on teaching and learning, and studies in fields like medical education report that mind maps improve knowledge retention and comprehension compared with traditional linear methods. Spatial organization draws on our capacity to form spatial schemas and cognitive maps, which can aid recall and pattern recognition in ways that linear text does not.

An AI mind map is like being given a set of building blocks and a suggested blueprint, then being handed the tools to rearrange them into a structure that makes sense to you.

This is not just about memory; it’s about creativity. Research indicates that mind mapping boosts creativity more than conventional text-based training does. By making the structure of ideas visible and editable, it creates a playground for insight, where gaps and connections become obvious.

Phase-Based Thinking: Matching the Tool to the Mental Job

The question, then, is not “which tool is better?” but “which tool is better for what?” Effective thinking is a multi-phase process, and cognitive ergonomics demands that we fit the tool to the mental job. Framing this as a binary choice misses the point. The most powerful thinking emerges from a strategic loop between generation and structuring.

Use AI Chat for:

- Initial Exploration: Diving into an unknown topic with broad, open-ended questions.

- Deep Dives: Iteratively refining a single complex question or piece of code.

- Narrative Generation: Role-playing, storytelling, or drafting linear content.

- Specific Q&A: Getting a precise fact, definition, or procedural step.

Use AI Mind Maps for:

- Synthesis: Combining and making sense of information from multiple sources (a research paper, a webinar, and a chat thread).

- Planning & Outlining: Structuring a project, article, or product roadmap.

- Brainstorming: Generating and organizing divergent ideas to see thematic clusters.

- Knowledge Structuring: Creating a long-term reference map for a complex domain you need to understand and remember.

The magic happens in the workflow that connects them. Imagine this process:

- Gather with Chat: Use a chatbot to explore a topic, ask follow-up questions, and generate raw material and perspectives.

- Structure with a Map: Feed the key insights or even the entire conversation into a tool like ClipMind to generate an initial mind map. Suddenly, the linear dialogue is transformed into a spatial structure.

- Edit & See Gaps: Reorganize the map to fit your mental model. Dragging nodes around often reveals connections you missed and, crucially, highlights gaps in your understanding.

- Return & Refine: Go back to the chat with specific, targeted questions born from the gaps you saw in your map.

This loop turns AI from an oracle into a cognitive partner. The chat generates; the map helps you understand; your understanding then guides smarter generation.

Beyond the Binary: The Integrated Cognitive Canvas

The dichotomy between linear chat and spatial map is, I believe, a temporary artifact of early tool design. The future of thinking tools lies not in choosing a side, but in dissolving the boundary. We need integrated environments that support fluid movement between narrative and spatial modes of thought.

Imagine an interface where, at any point in a chat conversation, you could pause and say, “Show me the map of this.” The underlying AI would extract the latent conceptual structure of the dialogue—the key entities, relationships, and hierarchies—and render it as an interactive mind map beside the chat. Conversely, you could click any node on a map and open a contextual chat pane to deepen, challenge, or expand that specific idea, with the AI fully aware of its place in the larger structure.

This vision aligns with the work of thinkers like Bret Victor, who advocates for “explorable explanations,” and Andy Matuschak, whose “evergreen notes” emphasize creating persistent, interconnected knowledge structures. In such a system, AI’s role evolves from a content generator to a true cognitive partner, helping us see and manipulate the architecture of our own thoughts.

The goal is to build a workshop for the mind, where the tools bend to the shape of thought, not the other way around.

Thinking as a Craft, Tools as the Workshop

We stand at an inflection point. AI has given us engines of unprecedented generative power. The critical challenge is no longer access to information, but the ability to synthesize, structure, and truly own that information. Our tools shape this process at a fundamental level.

Chat interfaces excel at linear depth, providing the thread of a compelling narrative. Mind maps excel at relational breadth, providing the landscape in which that narrative resides. The ultimate measure of a thinking tool is not the intelligence of its output, but how it shapes and improves the user’s own intelligence, creativity, and understanding.

The final insight is this: often, the deepest thinking occurs not within a single tool, but in the act of translation—of taking ideas from the linear stream of a chat and wrestling them into the spatial structure of a map, or of using the questions born from a map to fuel a more focused dialogue. Our tools should facilitate this translation, not lock us into a single mode.

So, experiment. Be mindful. Use chat to generate and explore. Use maps to understand and synthesize. Notice how each tool changes the texture of your thinking. The craft of thought is honed by choosing the right tool for the right phase, and by learning to build bridges between them. In that deliberate practice, we don’t just use AI to think; we learn to think better ourselves.